Despite market uncertainty, the data center sector has performed well in 2023, with the sector (including Digital Realty and Equinix) up 16.6 percent through the end of June vs. 15.9 percent for the S&P 500. While this year’s performance partially reflects investors becoming comfortable with operators’ ability to pass through higher power costs, the majority of the YTD rally has come on the heels of Nvidia’s earnings print in late May, which brought Artificial Intelligence (AI) to the forefront of the narrative within the investment community. From Nvidia’s earnings report on May 24th through the end of June, the data center sector rose 23.9 percent vs. 8.1 percent for the S&P 500, with the rally reflecting a recognition that data centers are required to deliver the benefits of AI. From an industry perspective, as both hyperscalers and tech companies rush to win the AI race, the result has been a tremendous increase in demand for data centers, with both the number and size of data center deals increasing meaningfully. For example, in 2022 we would have considered a large data center deal to be ~100 MW. This year, we have seen multiple 300+ MW data center deals signed, which speaks to the re-rating of deal sizes for the industry.

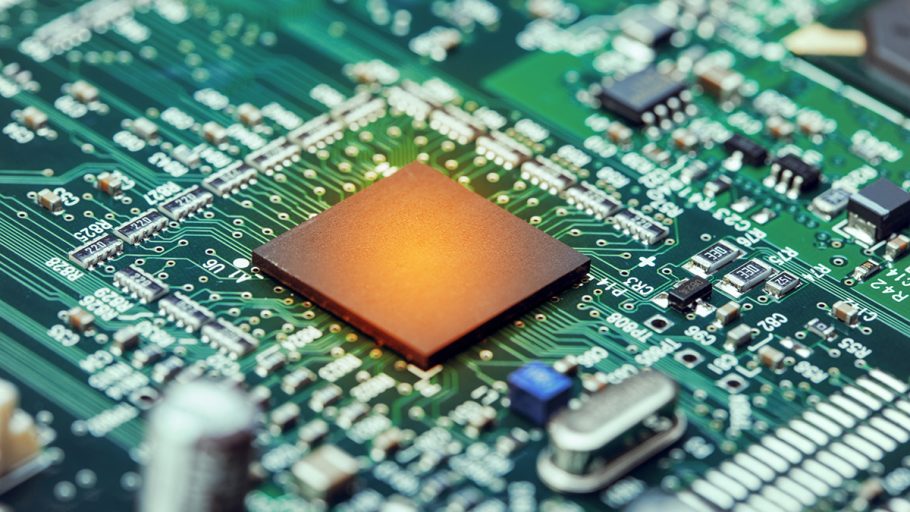

To understand what is driving this re-rating of demand, it is important to start at the server level. AI data center deployments leverage the compute capabilities of Graphics Processing Units (GPUs), whereas traditional enterprise deployments leverage Central Processing Units (CPUs). At a high level, GPUs are capable of performing more computations per unit than CPUs and are well suited to the intensive computations associated with AI. However, GPUs require significantly more power per unit than CPUs, which is driving higher power densities per cabinet. For context, traditional enterprise data center deployments typically run at 4–6 kW per cabinet and hyperscale cloud deployments typically run at 14–17 kW per cabinet, whereas AI deployments run at 30+ kW per cabinet. As a result, AI deployments require more power per square foot, which in part explains the increase in deal sizes for AI deployments. However, given the greater compute capabilities of GPUs, the natural investor reaction is to think that fewer servers (and in turn, less data center capacity) will be required to support the same number of computations. While this logic is true in theory, in practice we have observed an increase in the total number of computations performed. This phenomenon is best summarized by the Jevons paradox, which states that when technological progress increases the efficiency with which a resource is used, the result is an increase in the consumption of said resource. In the case of AI and GPUs, the increased ability to perform computations drives an increase in the number of computations, further explaining why data center demand has increased meaningfully.
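To make the density arithmetic concrete, the short Python sketch below converts the per-cabinet figures quoted above into total IT load for a fixed cabinet count, and then illustrates the Jevons effect as a simple ratio. The cabinet count (5,000), the efficiency gain (4x), and the demand growth (10x) are hypothetical values chosen purely for illustration and do not come from any deal or dataset. The AI figure uses 30 kW, the lower bound of "30+", and the calculation ignores cooling and other facility overhead.

```python
# Illustrative sketch only: the cabinet count and growth factors are hypothetical.

# Per-cabinet power densities quoted in the text, in kW (low, high).
# For AI, 30 kW is the lower bound of the "30+" figure.
DENSITY_KW = {
    "enterprise": (4.0, 6.0),
    "hyperscale cloud": (14.0, 17.0),
    "AI": (30.0, 30.0),
}


def it_load_mw(cabinets: int, kw_per_cabinet: float) -> float:
    """IT load in MW for a cabinet count and per-cabinet density (no facility overhead)."""
    return cabinets * kw_per_cabinet / 1000.0


def load_ranges(cabinets: int) -> dict:
    """Low and high IT load in MW for each deployment type at the same cabinet count."""
    return {
        name: (it_load_mw(cabinets, low), it_load_mw(cabinets, high))
        for name, (low, high) in DENSITY_KW.items()
    }


def capacity_multiplier(efficiency_gain: float, demand_growth: float) -> float:
    """Change in servers needed when throughput per server and total computations both grow.

    Servers needed = computations / throughput per server, so the ratio of
    new to old server count is demand_growth / efficiency_gain.
    """
    return demand_growth / efficiency_gain


if __name__ == "__main__":
    cabinets = 5_000  # hypothetical deployment size
    for name, (low, high) in load_ranges(cabinets).items():
        print(f"{name}: {low:.0f} to {high:.0f} MW for {cabinets} cabinets")

    # Hypothetical: servers become 4x more capable, but computations grow 10x.
    print(f"capacity multiplier: {capacity_multiplier(4.0, 10.0):.2f}x")
```

Under these assumptions, the same cabinet footprint draws several times more power at AI densities than at enterprise densities. The multiplier falls below 1 only when demand for computations grows more slowly than per-server efficiency, which is the "fewer servers" case that investors expect in theory.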

ABOUT THE AUTHOR

Michael Elias is a Senior Equity Research Analyst at TD Cowen. He covers the communications infrastructure sector, including data centers and content delivery networks (CDNs), and has been a member of the communications infrastructure team since 2017. Prior to joining TD Cowen, Elias worked as an equity analyst at Xanthus Capital Management. He received his B.S. in Industrial Engineering and Operations Research: Engineering Management Systems from Columbia University’s School of Engineering and Applied Sciences.