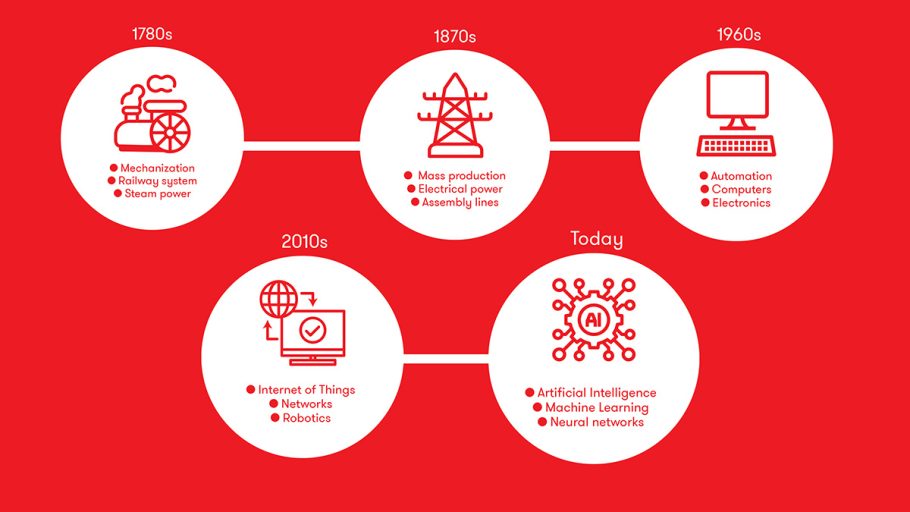

Although there’s a significant amount of hype around artificial intelligence (AI), there’s no debate that AI is real and is already reshaping a wide range of industries, driving innovation and efficiency to previously unimaginable levels. However, as with the introduction of any disruptive technology, such as the steam engine, electricity, and the Internet, AI comes with unique challenges, opportunities, and unknowns.

AI infrastructure challenges lie in cost-effectively scaling storage, compute, and network infrastructure while also addressing massive increases in energy consumption and long-term sustainability. To understand these challenges better, let’s look at how AI affects networks within the data centers hosting AI infrastructure and then work outward to the networks that connect data centers over increasing distances.

AI Is Not a New Concept

AI, as a field of research, isn’t new; it was founded at a workshop at Dartmouth College in 1956, whose attendees became the first AI research pioneers. Although they were optimistic about AI’s potential, it took more than six decades for AI to become the reality it is today, because only recently have sufficient compute, storage, and network resources become available for AI infrastructure, which leverages today’s cloud model.

From the Edge into the Cloud

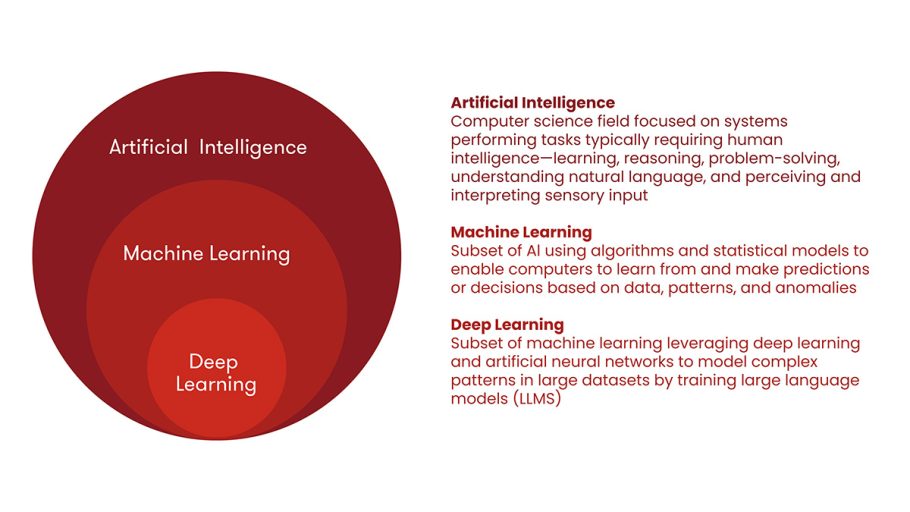

Large language models (LLMs) use deep learning based on artificial neural networks, which “borrow” concepts from how the human brain works. Training an LLM requires massive amounts of data, so if that data is not already in the cloud data center hosting the AI infrastructure, it must be moved there, for example from a large enterprise. Because LLMs require so much training data, especially for AI involving images and video, edge networks can quickly be overwhelmed, which means substantial upgrades are required for AI to be rolled out at scale. To see why edge networks need upgrading, consider the time required to move datasets of different sizes into a cloud AI data center for LLM training.
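
As a rough illustration of that transfer time, the following minimal sketch divides dataset size by usable link throughput. The dataset sizes, the edge link rates, and the 80 percent efficiency factor (standing in for protocol overhead and link sharing) are all hypothetical assumptions, not measured values.

```python
# Rough estimate of how long it takes to move a training dataset into a cloud
# AI data center. Sizes, link rates, and the efficiency factor are assumptions.

def transfer_time_hours(dataset_bytes: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Return the hours needed to move dataset_bytes over a link_gbps link.

    efficiency is a hypothetical fraction of line rate actually achieved.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link rate must be positive and efficiency in (0, 1]")
    bits = dataset_bytes * 8                    # bytes -> bits
    usable_bps = link_gbps * 1e9 * efficiency   # Gb/s -> usable bits per second
    return bits / usable_bps / 3600             # seconds -> hours


if __name__ == "__main__":
    TB = 1e12  # decimal terabyte
    for size_tb in (10, 100, 1000):             # 10 TB, 100 TB, 1 PB
        for rate in (1, 10, 100):               # edge link rates in Gb/s
            hours = transfer_time_hours(size_tb * TB, rate)
            print(f"{size_tb:>5} TB over {rate:>3} Gb/s: {hours:8.1f} h ({hours / 24:6.1f} days)")
```

Under these assumptions, 1 PB takes roughly 116 days over a 1 Gb/s link but about 28 hours over a 100 Gb/s link, which is the kind of gap that motivates edge upgrades.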

Intra Data Center Networks for Artificial Intelligence

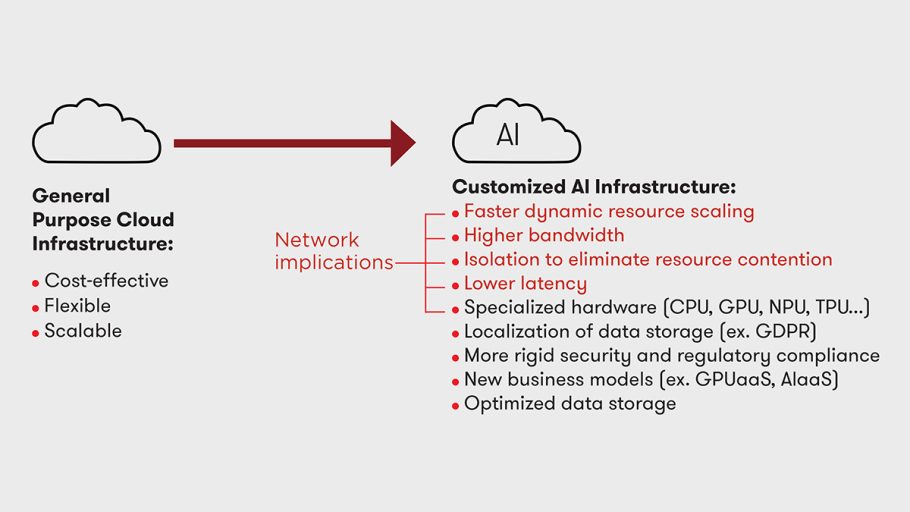

AI was born in the data centers that host the traditional cloud services we use daily in our business and personal lives. However, for use cases like LLM training, the underlying technology of generative AI (GenAI) applications such as the widely popular ChatGPT, AI’s storage, compute, and network requirements quickly became too complex and demanding for traditional cloud infrastructure. Traditional cloud infrastructure succeeds because it is cost-effective, flexible, and scalable, and these are also essential attributes for AI infrastructure.

However, AI also demands a new and more extensive range of network performance capabilities. Today, AI infrastructure technology is mostly closed and proprietary, but the industry has rallied to form new standardization groups, such as the Ultra Ethernet Consortium (UEC) and the Ultra Accelerator Link (UALink) Promoter Group, to create a broader technology ecosystem that drives faster innovation by leveraging a more secure supply chain of vendors.

AI applications such as LLM training, which leverage deep learning and artificial neural networks, involve moving massive amounts of data within a data center over short, high-bandwidth, low-latency networks operating at 400 Gb/s and 800 Gb/s today, and at 1.6 Tb/s and higher in the future. Just as customized AI-specific processors such as central processing units (CPUs) and graphics processing units (GPUs) are being developed, network technology innovation is also required to fully optimize AI infrastructure. This innovation includes advances in optical transceivers, optical circuit switches (OCS), co-packaged modules, network processing units (NPUs), standards-based UEC and UALink platforms, and other networking technologies.
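
To make these line rates concrete, the sketch below estimates the bandwidth-bound time of a ring all-reduce, one common collective for synchronizing gradients across GPUs during training. The source does not name a specific algorithm; the ring all-reduce, the 8-GPU group, and the 10 GB gradient payload are illustrative assumptions, and latency, congestion, and compute overlap are ignored. Note the factor of 8 between bits and bytes: 400 Gb/s is 50 GB/s.

```python
# Idealized, bandwidth-only estimate for a ring all-reduce. In a ring of N GPUs,
# each GPU sends 2 * (N - 1) / N times the payload. Latency terms are ignored.

def ring_allreduce_seconds(payload_bytes: float, num_gpus: int, link_gbps: float) -> float:
    """Seconds for one ring all-reduce of payload_bytes at link_gbps per GPU."""
    if num_gpus < 2:
        return 0.0                               # nothing to exchange with one GPU
    bytes_per_gpu = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    link_bytes_per_s = link_gbps * 1e9 / 8       # Gb/s -> bytes per second
    return bytes_per_gpu / link_bytes_per_s


if __name__ == "__main__":
    GRADIENTS = 10e9  # hypothetical 10 GB of gradients per training step
    for rate in (400, 800, 1600):
        t = ring_allreduce_seconds(GRADIENTS, num_gpus=8, link_gbps=rate)
        print(f"{rate:>5} Gb/s: {t * 1e3:7.1f} ms per all-reduce")
```

In this simplified model, each doubling of the link rate halves the synchronization time, repeated at every training step.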

Although these network technology advancements will address AI performance challenges, the massive space and energy that AI infrastructure consumes will lead to many more data centers being constructed and interconnected. The distances within and between these data centers will require different network solutions.

AI Campus Networks

A single modern GPU, the foundational element of AI compute clusters, can consume as much as 1,000 watts, so when tens to hundreds of thousands (and more) are interconnected for purposes like LLM training, the associated energy consumption becomes a monumental challenge for data center operators. New AI infrastructure will rapidly consume the available energy and space within existing data centers. This will lead to new data centers being constructed in “campuses,” where data centers are separated by less than 10 kilometers to minimize latency and improve AI application performance. Campuses will need to be located near energy that is reliable, sustainable, and cost-effective. Campus data centers will be connected to each other and to distant data centers using optics optimized for specific cost, power, bandwidth, latency, and distance requirements.
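
The scale of these numbers can be checked with simple arithmetic. The sketch below assumes a hypothetical 100,000-GPU cluster at the 1,000-watt figure above (GPU power only, excluding CPUs, networking, and cooling) and approximates propagation delay in standard fiber as about 5 microseconds per kilometer, which is an approximation rather than a value from the source.

```python
# Back-of-the-envelope figures for an AI campus. The GPU count, per-GPU power,
# and fiber delay constant are illustrative assumptions.

HOURS_PER_YEAR = 8760
FIBER_US_PER_KM = 5.0  # approx. one-way delay in silica fiber (about 4.9 us/km)


def gpu_power_mw(num_gpus: int, watts_per_gpu: float = 1000.0) -> float:
    """GPU-only power draw in megawatts (excludes CPUs, networking, and cooling)."""
    return num_gpus * watts_per_gpu / 1e6


def annual_energy_gwh(power_mw: float) -> float:
    """Energy in GWh if power_mw is drawn continuously for a full year."""
    return power_mw * HOURS_PER_YEAR / 1000


def fiber_rtt_us(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, ignoring equipment latency."""
    return 2 * distance_km * FIBER_US_PER_KM


if __name__ == "__main__":
    mw = gpu_power_mw(100_000)  # hypothetical 100,000-GPU cluster
    print(f"{mw:.0f} MW, about {annual_energy_gwh(mw):.0f} GWh per year")
    print(f"10 km campus link round trip: {fiber_rtt_us(10):.0f} us")
```

Under these assumptions the GPUs alone draw 100 MW, and a 10 km campus link adds roughly 100 microseconds of round-trip propagation delay, which is why campus distances are kept short.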

Data Center Interconnection (DCI) Networks

As AI infrastructure is hosted in new and existing data centers, those data centers will need to be interconnected, just as data centers are interconnected today for traditional cloud services. This interconnection will use similar optical transport solutions, albeit at higher rates, including 1.6 Tb/s, an industry first enabled by Ciena’s WaveLogic™ 6 technology. How much new traffic are we talking about? According to a recent analysis from research firm Omdia, monthly AI-enriched network traffic is forecast to grow at a compound annual growth rate (CAGR) of approximately 120 percent from 2023 to 2030. That is a lot of additional traffic for global networks to carry.
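
A CAGR compounds, so it is worth seeing what the forecast implies end to end. The sketch below simply applies the 120 percent rate over the seven annual periods from 2023 to 2030; it is arithmetic on the cited figure, not an additional forecast.

```python
# Compounding a compound annual growth rate (CAGR) over several years.

def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor after `years` of compounding at `cagr` (1.20 means 120%)."""
    return (1 + cagr) ** years


if __name__ == "__main__":
    years = 2030 - 2023  # seven annual compounding periods
    multiple = growth_multiple(1.20, years)
    print(f"2030 monthly volume is about {multiple:.0f}x the 2023 level")
```

A 120 percent CAGR means traffic multiplies by 2.2 each year, which over seven years works out to roughly 250 times the 2023 volume.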

For enterprises, AI will drive an increasing need to migrate data and applications to the cloud due to economics, gaps in in-house AI expertise, and challenging power and space limitations. As cloud providers offer AI-as-a-Service and/or GPU-as-a-Service, performing LLM training in the cloud will require enterprises to move huge amounts of training data securely between their premises and the cloud, as well as across different cloud instances. This will drive the need for more dynamic, higher-speed interconnections that require more cloud exchange infrastructure, which represents a new revenue-generating opportunity for telcos.

Optimized AI Performance at the Network Edge

Once an LLM is properly trained, it will be optimized and “pruned” to provide acceptable inferencing accuracy (inferencing being the use of AI in the real world) within a much smaller footprint in terms of compute, storage, and energy requirements. These optimized AI models are pushed out to the edge to reduce the strain on core data centers hosting LLM training, reduce latency, and comply with data privacy regulations by hosting data locally. Placing AI storage and compute assets in geographically distributed data centers closer to where AI is created and consumed, whether by humans or machines, enables faster data processing for near-real-time AI inferencing. This means more edge data centers to interconnect.
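
Pruning can take many forms, and the source does not specify one. As an illustration only, the sketch below shows unstructured magnitude pruning, a common basic technique that zeroes the smallest-magnitude weights until a target sparsity is reached. The weight values are hypothetical, and real LLM pruning pipelines are considerably more involved (for example, retraining to recover accuracy).

```python
# Minimal unstructured magnitude pruning on a flat list of weights.

def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)             # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices sorted by absolute value; the first k are the ones to drop.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    w = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2, -0.02, 0.5]  # hypothetical weights
    pruned = magnitude_prune(w, sparsity=0.5)
    kept = sum(1 for x in pruned if x != 0.0)
    print(pruned)
    print(f"{kept}/{len(w)} weights kept")
```

Zeroed weights only reduce memory and compute when the inference runtime exploits the resulting sparsity, so the actual footprint savings depend on the deployment stack.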

Balancing Electrical Power Consumption and Sustainability

AI is progressing at an increasingly rapid pace, creating new opportunities and challenges to address. For example, AI models based on deep learning and artificial neural networks are notoriously power-hungry during LLM training and consume immense amounts of electricity. This demand will only increase as models become more complex and require ever-greater compute, storage, and networking capabilities.

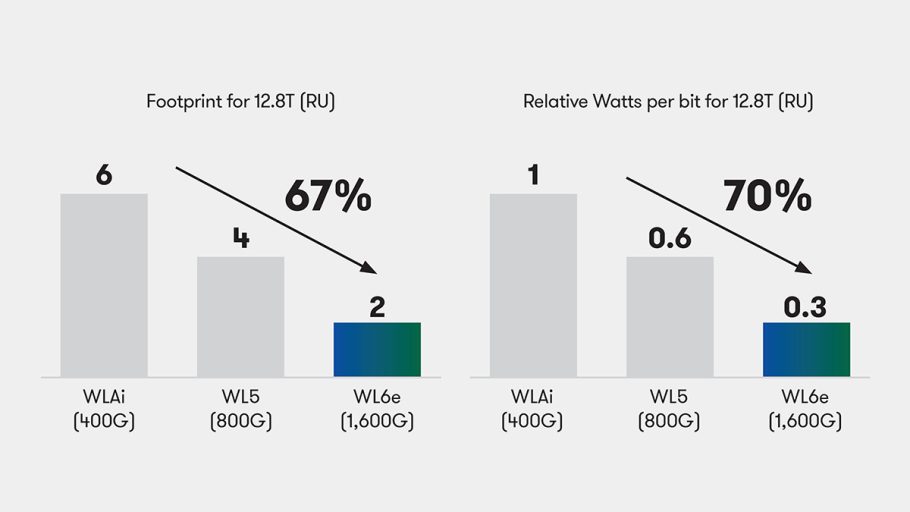

Although AI compute and storage infrastructure consumes far more electrical energy than the networks that interconnect it, network power consumption cannot keep growing in step with bandwidth; that is neither sustainable nor cost-effective. This means network technology must also consistently reduce electrical power (and space) per bit to “do its part” in an industry so critical to enabling AI capabilities. One example of this relentless technology evolution is Ciena’s WaveLogic™, which continually increases achievable spectral efficiency while reducing the required power and space per bit.
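
The power-per-bit argument reduces to one relationship: power equals bit rate times energy per bit. The sketch below uses hypothetical numbers (20 W at 400 Gb/s, not figures for any Ciena product) to show that quadrupling bandwidth at constant energy per bit quadruples power, while halving energy per bit limits the increase to double.

```python
# Power = bit rate x energy per bit. All numbers are hypothetical.

def picojoules_per_bit(power_w: float, rate_gbps: float) -> float:
    """Energy per bit in pJ/bit: watts divided by bits per second, scaled to pJ."""
    return power_w / (rate_gbps * 1e9) * 1e12


def total_power_w(rate_gbps: float, pj_per_bit: float) -> float:
    """Watts needed to carry rate_gbps at the given energy per bit."""
    return rate_gbps * 1e9 * pj_per_bit * 1e-12


if __name__ == "__main__":
    base_rate, base_power = 400.0, 20.0               # hypothetical: 20 W at 400 Gb/s
    base_pj = picojoules_per_bit(base_power, base_rate)  # 50 pJ/bit
    new_rate = 1600.0                                 # 4x the bandwidth
    flat = total_power_w(new_rate, base_pj)           # same pJ/bit: 4x the power
    improved = total_power_w(new_rate, base_pj / 2)   # half the pJ/bit: 2x the power
    print(f"{base_pj:.0f} pJ/bit; 4x bandwidth: {flat:.0f} W flat vs {improved:.0f} W improved")
```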

AI Data Is Only Valuable If It Can Move

Hype aside, AI will provide unprecedented benefits across industries that positively affect our business and personal lives. However, the rapid and widespread adoption of AI presents a range of new challenges for its foundational compute, storage, and network infrastructure. Successfully addressing these challenges requires extensive cross-industry innovation and collaboration, because AI will only scale successfully if data can move securely, sustainably, and cost-effectively, from inside the core data centers hosting LLM training to the edge data centers hosting AI inferencing.