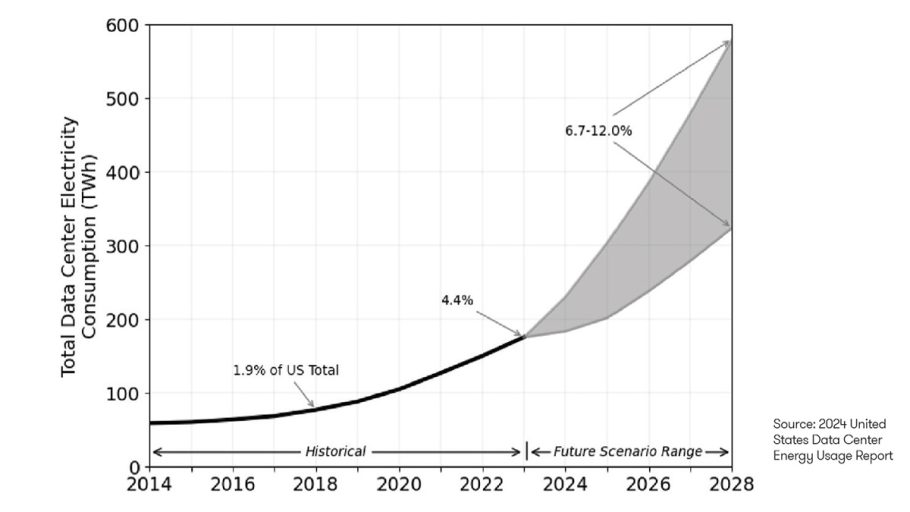

Over the past couple of years, the datacenter industry has seen unprecedented growth due to the AI explosion. However, this step function in demand has put significant strain on the supply chain in every facet of datacenter development. One of the most talked-about aspects is powering these datacenters. A DOE-backed study shows that, by 2028, datacenters could account for 6.7 to 12 percent of US electricity consumption. The problem is that no one sees a path to get from where we are today (just over 4.4 percent) to where we need to be by 2028. As a result, there has been a mad rush to corner the market on powered land. Utilities are being inundated with interconnection requests, which commonly double count (if not more) the capacity that is actually needed. To top it off, the scale of the requests is soaring, with some reaching gigawatt (GW) scale. The requests are approved on a case-by-case basis with no regard to competing demands elsewhere on the grid. Interconnection decisions are made in a vacuum and extremely conservatively, since no one really knows how the interdependencies in the transmission fabric work.

Ultimately, there is a huge opportunity for a grid orchestrator that can model the grid. One such tool that shows promise is GridCARE (gridcare.ai). Its ability to look at the concentration of electron traffic and assess the impact on the interconnected grid will be necessary to determine which transmission lines would be saturated if a major load is added. It can also help determine where excess power is available. Tools like GridCARE can provide much better strategic insight into where the power is and what the impact of adding load will be. But in the short term, we can also consider minimizing the impact on the grid using “Power Caching.”

Power Caching

What is power caching? Let’s start with the definition of caching.

Caching is a system design concept that involves storing frequently accessed data in a location that is easily and quickly accessible.

In other words, data that is used a lot is stored locally (e.g., RAM) and data that is used less frequently is stored far away (e.g., a hard drive). This is done to improve performance and efficiency in computing. An analogy in the non-computing world is Amazon’s warehouse logistics. To improve delivery times for Prime customers, Amazon added small local warehouses stocked with frequently purchased goods, which allows same-day or next-day delivery, while its regionally distributed mega warehouses sit further away and hold goods that take more time to arrive. This enables Amazon to cut transportation time and minimize fuel and labor costs. So Amazon is “caching” frequently purchased goods to get better performance.
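
To make the idea concrete, here is a minimal sketch of a small, fast store sitting in front of a large, slow one. It is only an illustration: the class name `TwoTierStore`, the least-recently-used eviction rule, and the cost values are assumptions chosen for this example, not measurements or a description of any particular system.

```python
from collections import OrderedDict

# Illustrative costs only (assumed round numbers, not measurements).
FAST_COST = 1     # cost of reading from the small, nearby store
SLOW_COST = 100   # cost of reading from the large, distant store


class TwoTierStore:
    """A small least-recently-used cache in front of a slow backing store."""

    def __init__(self, backing, capacity):
        self.backing = backing        # the "far away" store
        self.capacity = capacity      # how many items fit nearby
        self.cache = OrderedDict()    # the "local" store, oldest entry first
        self.total_cost = 0

    def get(self, key):
        if key in self.cache:
            # Hit: serve locally and mark the item as most recently used.
            self.cache.move_to_end(key)
            self.total_cost += FAST_COST
            return self.cache[key]
        # Miss: fetch from the distant store, then keep a local copy.
        value = self.backing[key]
        self.total_cost += SLOW_COST
        self.cache[key] = value
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used
        return value


backing = {f"item{i}": i for i in range(1000)}
store = TwoTierStore(backing, capacity=2)
for key in ["item1", "item2", "item1", "item1", "item2", "item1"]:
    store.get(key)
print(store.total_cost)  # 204
```

With the cache, the six reads cost 204 units (two misses and four hits). Sending every read to the distant store would cost 600.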

Similarly, edge computing serves the same purpose: it stores frequently accessed data in small local datacenters that are closer to the user, thus improving latency. In many cases, carriers even prefer that edge nodes be deployed to relieve congestion and free up data capacity on long-haul cables.

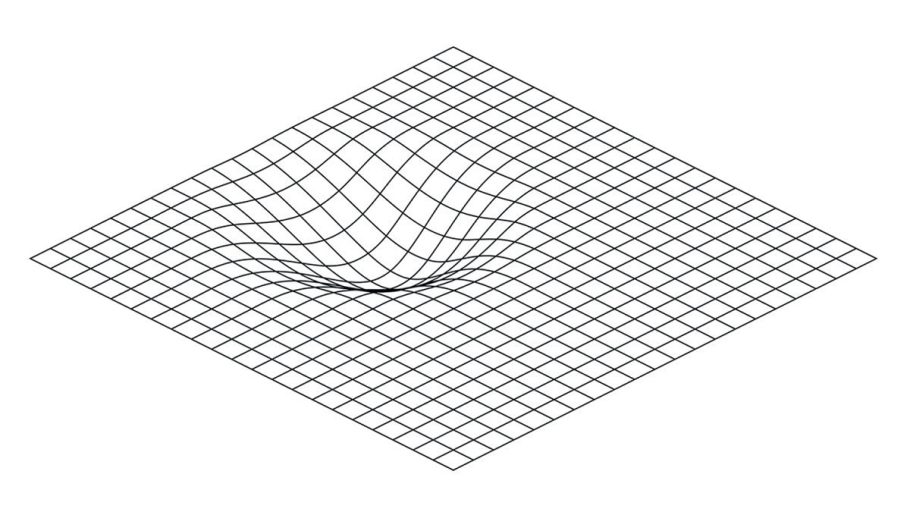

Applying this line of thinking to the grid, we can envision a similar analogy with power. A datacenter load that is added to the grid will consume huge amounts of electrons (i.e., power). The grid is the highway for the electrons, with certain capacity limitations. Under today’s status quo, a datacenter lands in a region with an interconnection to the grid and consumes vast amounts of power. Three scenarios could happen:

1 For illustration purposes, let’s envision the transmission grid as a simple plane. In the first scenario, the addition of the datacenter creates a sag or warp in the grid, as shown in Figure 1. If no power generation is added, the rest of the grid would need to compensate and import power to the community around the datacenter. However, this assumes that the transmission lines actually have the capacity to do so, even if there is enough generation on the grid. It also assumes that no other load will appear and sag the grid, driving even more complexity into the system. The bottom line is that with a few additional loads, the grid will be out of capacity and no new loads will be approved (we see this today).

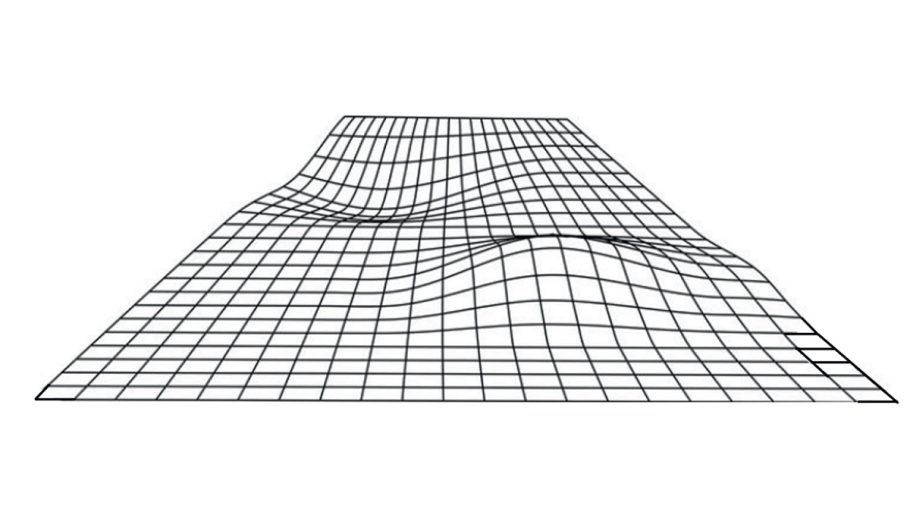

2 To solve the problem in (1), datacenter operators will typically add generation through a PPA to land power somewhere on the grid, as represented by the image in Figure 2. While this approach tends to neutralize the additional load, it requires energy to be moved across distances via transmission lines that may or may not have the capacity. This is what is typically done for renewable PPAs. When loads were small, this was not a problem, since the incremental power was negligible relative to the transmission capacity. But with larger AI loads the impact is significant, creating a nightmare for grid operators, who need to ensure that transmission lines are not at risk of saturation.

3 As in scenario (1), the addition of the datacenter creates a sag or warp. To counter this, however, one would provide local generation at the site to bring the regional grid back to where it was before the datacenter was there. This is the concept of “Power Caching.” Just as edge nodes are created in networks to put data where it will be consumed and relieve congestion, power caching puts the electrons where they will be consumed and eliminates any impact on the grid. Note that the power plant and the datacenter would still be connected to the grid in order to use and offer grid services, but in the nominal state the datacenter and generation look as if they don’t even exist. Scenarios 1 and 2 only make the grid more fragile, whereas scenario 3 drives a more robust approach.
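
The three scenarios above can be compared with a deliberately simple bookkeeping sketch. Everything in it is a hypothetical illustration: the 1,000 MW load, the `Scenario` class, and its two methods are assumptions made for this example, and the model ignores losses, network topology, time variation, and reliability margins. It only tracks how much power must arrive over transmission lines and how much new demand is not matched by new generation anywhere.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    load_mw: float              # datacenter demand (hypothetical value)
    remote_gen_mw: float = 0.0  # new generation elsewhere on the grid (e.g. a PPA)
    local_gen_mw: float = 0.0   # new generation at the datacenter site

    def transmission_import_mw(self) -> float:
        """Power that must reach the site over transmission lines."""
        return max(self.load_mw - self.local_gen_mw, 0.0)

    def unmatched_demand_mw(self) -> float:
        """New demand not offset by any new generation on the grid."""
        return max(self.load_mw - self.remote_gen_mw - self.local_gen_mw, 0.0)


scenarios = [
    Scenario("1: load only", load_mw=1000.0),
    Scenario("2: load + remote PPA", load_mw=1000.0, remote_gen_mw=1000.0),
    Scenario("3: power caching", load_mw=1000.0, local_gen_mw=1000.0),
]

for s in scenarios:
    print(
        f"{s.name}: transmission import {s.transmission_import_mw():.0f} MW, "
        f"unmatched demand {s.unmatched_demand_mw():.0f} MW"
    )
```

Under these assumptions, scenario 1 leaves 1,000 MW of both transmission import and unmatched demand, scenario 2 removes the unmatched demand but still moves 1,000 MW across the grid, and scenario 3 brings both figures to zero in the nominal state.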

In summary, this article was meant to help visualize the impact of datacenters on the grid and, hopefully, to illustrate the need for:

1 A more strategic, holistic approach to orchestrating grid interconnections, one that allows grid operators to use the full capacity of the grid while lowering the risk of catastrophic events. The work by GridCARE shows this potential.

2 Using Power Caching to develop datacenters moving forward. The principle of caching always emerges as technologies mature, whether in computer architecture, edge nodes and networks, or even warehouse logistics. For efficiency and performance, caching is always the solution.

ABOUT THE AUTHOR

Christian Belady is highly experienced in managing data center and infrastructure development at global scale. Currently, he is an advisor and board member of several companies in the infrastructure space. Prior to this, Belady served as Vice President and Distinguished Engineer of Datacenter R&D for Microsoft’s Cloud Infrastructure Organization, where he developed one of the largest data center footprints in the world. Before that, he was responsible for driving the strategy and delivery of server and facility development for Microsoft’s data center portfolio worldwide.

With over 150 patents, Belady is a driving force behind innovative thinking and quantitative benchmarking in the field. He is an originator of the Power Usage Effectiveness (PUE) metric, was a key player in the development of the iMasons’ Climate Accord (ICA), and has worked closely with government agencies to define efficiency metrics for data centers and servers. Over the years, he has received many awards, most recently the NVTC Datacenter Icon award, and was elected to the National Academy of Engineering.