How long has Digital Realty been working on data gravity and how was the concept born?

Digital Realty is the world’s largest data center provider in terms of operations and square footage. We sit at a nexus point of being able to see how various types of enterprises and service providers build out infrastructure. This enables us to spot emerging trends very early. One of the trends we identified during the past two years was that enterprises were starting to build these larger data sets. They were doing so on large compute footprints on multiple sites around the world on our platform. As we began to look deeper, we also initiated conversations with the customers to try and understand the cause and effect.

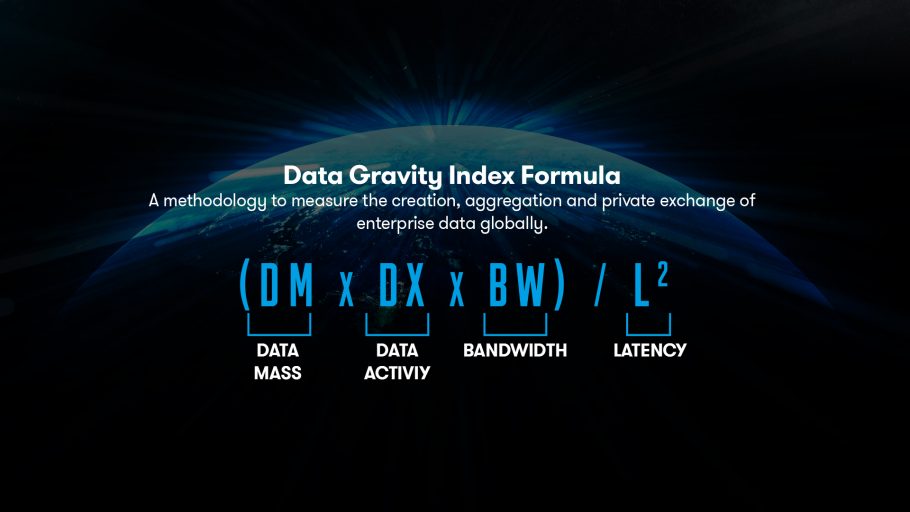

The common theme was data explosion that included where the data was created; how it was aggregated; how it was then enriched with analytics; and, how it was exchanged between many different platforms to a company (internal and external). That was the genesis of the concept of data gravity.

What does the modern digital workplace mean to the enterprise and what are the challenges that need to be addressed? How does PlatformDIGITAL® solve it?

Data is now created at endpoints that are outside of a data center or outside of the cloud itself. Anywhere you have access to a device you are creating data. Therefore, data creation happens at different endpoints that are going to come over enterprises’ corporate networks via WiFi or mobile network. Data has to then be aggregated and managed for compliance purposes, security and performance. Combining these facets, you are going to overhaul an enterprise’s infrastructure architecture.

The previous process to connect the endpoints and backhaul into the data centers or the cloud now has to be inverted. That means bringing everything to the data whether it’s users, applications, clouds or business platforms. This is what we observed and published our findings in the report “Data Gravity Index DGxTM” as an emerging infrastructure trend. Our product roadmap at PlatformDIGITAL® includes aspects of not only connectivity but also data and computing-related issues, where you integrate private enterprise infrastructure with the public infrastructure that enterprises use.

2020 has accelerated the adoption of digital applications. What kind of impact will it have on the future and what role will data gravity play?

This year is definitely creating a new norm on every level. It’s creating a new set of expectations. What used to be a more bounded physical office becomes unlocked. When the world turns back, it will be a mixed model. One that offers the unbounded experience of being able to conduct business, participate in business workflows and be part of the digital workplace from anywhere in the world. Performance, security and scale is now more vital than ever. This will force architectures and infrastructures to be even more critical, grow in size and be rearchitected to accommodate this new paradigm.

With regards to the role of data gravity, what we are seeing is that with digital workplaces and remote working, users are connected to the enterprise from many different places. Users continue to consume applications and services, and interact with many other users. This is the endpoint concept that I talked about earlier. These endpoints will need to interact with other endpoints, which creates a file, message or both. These are both—structured and unstructured—and will lead to an explosion of data that has to be processed, then aggregated and be maintained for compliance purposes.

AI, ML, autonomous cars, connected cars and IoT are all driving massive amounts of data. What are your views on these emerging technologies and how do they impact the data equation?

It is important to note three things: firstly, where the data is being generated; secondly, where it is being processed; and thirdly how those endpoints participate in business workflows. As these emerging technologies—AI, ML, autonomous cars, connected cars and IoT—unlock the ability for more intelligent workflows that can originate within the connected worlds of things, it leads to storage multipliers in terms of volume and variability.

On the other side, what AI underpins is the need to see all data. As a result, this makes the data aggregation piece become very important. The data needs to be cleansed and normalized, followed by analytics that run on top of that.