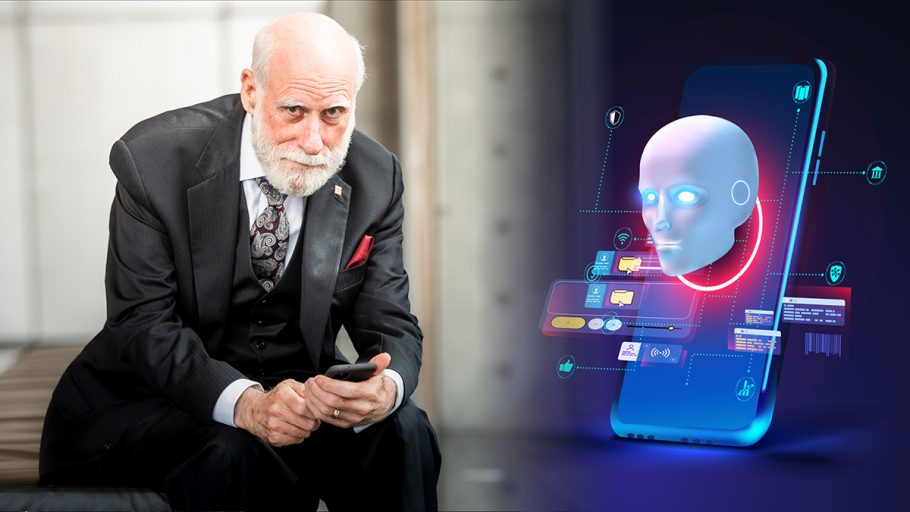

I am not an expert on machine learning (the current hyped brand of artificial intelligence), but, like many, I have tried interacting with large language models (LLMs) that fuel the so-called “chatbots.” The term “chatbot” is fairly accurate if one wishes for pure entertainment. These bots are quite good at generating stories in response to prompting texts of various lengths. I asked one such bot to write a story about a Martian that had gotten into my wine cellar. It wrote about 700 amusing words on how the alien survived by drinking my Napa Valley cabernet sauvignon!

To test the bot’s ability to write factual material, I asked it to produce an obituary for me. I know that sounds macabre, but I assumed that the training material (tons of text off the Internet) would have included the format of an obituary and that there was a fair amount of factual information about me on the Internet (e.g. Wikipedia). The bot did indeed produce a properly formatted obituary that began “We are sorry to report that Vinton G. Cerf passed away…” and it made up a date. It proceeded to render various moments in my career and life history, some of which it got right (birthdate, location), but as it delved into my career, it conflated things I had done with things other people did. When it got to the “surviving family members” part, it made up family members I don’t have. Well, at least, I don’t think I have!

I wondered how this could happen and thought about how the machine learning weights of the bot’s neural network are generated. Imagine for a moment that your biography and mine are found on the same web page. If that coincidence occurred with any likelihood, it is possible that the bot would conclude that there is a correlation between the words on that page and my name, including words that describe your career. While I am speculating here, it seems possible that this kind of conflation is predictable. The bots generate their output token by token, where a “token” is a word or a fragment of a word. Each new token is chosen because it has a high probability of coming next, based on what has been entered as a prompt or generated as output up to that point by the bot.
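To make the speculation concrete, here is a toy sketch in Python. It is only an illustration under loud assumptions: a simple table of word-pair counts stands in for the neural network, the "page" and the names Alice and Bob are invented, and the helper names `train_bigrams` and `generate` are hypothetical. Real LLMs are vastly more sophisticated, but the sketch shows how picking the most probable next token can attach one person's career to another person's name.

```python
from collections import Counter, defaultdict

# Hypothetical web page holding two short biographies (invented data).
PAGE = (
    "alice designed network protocols . "
    "bob designed garden furniture . "
    "bob designed garden furniture ."
)

def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, max_tokens=3):
    """Greedy generation: repeatedly append the most frequent follower."""
    output = [start]
    for _ in range(max_tokens):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # no known continuation for this word
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)

model = train_bigrams(PAGE)
print(generate(model, "alice"))  # prints: alice designed garden furniture
```

On this invented page, "designed" is followed by "garden" twice and by "network" only once, so the toy model credits Alice with Bob's garden furniture. Nothing in the counts records whose biography a phrase came from, which is the kind of conflation I am guessing at.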

It should be apparent that this generative process has the potential to misattribute text it finds in proximate association with my name, or perhaps other expressions related to my life, career, and work. Correlation is not always truth, as one can ascertain by noticing the high correlation of flat tires near hospitals catering to baby births. One might conclude that flat tires cause babies while, in fact, it is the other way around. Births cause flat tires because of frantic loved ones driving mothers-to-be to the hospital and hoping to get there before the baby arrives.

While these are overly simplified examples, it is important to appreciate that, even when trained on ostensibly factual material, these accidental and incorrect correlations can be the source of misinformation. Bot-makers are trying to find ways to detect and correct or suppress these kinds of hallucinations (an apparently accepted technical term in the AI community), but the results are still fairly mixed. If I were channeling Freud, I would say that the artificial id and ego of the chatbots are in need of an artificial superego to curb their unbound imaginings.

I have no doubt that bot-makers are working hard to find ways to manage these occasional ravings, but I am somewhat less sanguine about success. Somehow, I hope that bots will be able to cite sources and explain reasoning when making assertions about facts. Provenance of sources will be useful to have in hand. It also seems important that users of these systems are warned that the output is bot-generated. A recent White House announcement cites eight voluntary commitments bot-makers have undertaken to constrain the potential confusion and damage the use of these AI tools might cause. Welcome to the 21st Century!

ABOUT THE AUTHOR

Dr. Vinton G. Cerf is Vice President and Chief Internet Evangelist for Google. Widely known as one of the “Fathers of the Internet,” Cerf is the co-designer of the TCP/IP protocols and the architecture of the Internet. For his pioneering work in this field as well as for his inspired leadership, Cerf received the A.M. Turing Award, the highest honor in computer science, in 2004.

At Google, Cerf is responsible for identifying new enabling technologies to support the development of advanced, Internet-based products and services. Cerf is also Chairman of the Internet Ecosystem Innovation Committee (IEIC), an independent committee that promotes Internet diversity by forming global Internet nexus points, and is one of the global industry leaders honored in the inaugural InterGlobix Magazine Titans List.

Cerf is former Senior Vice President of Technology Strategy for MCI Communications Corporation, where he was responsible for guiding corporate strategy development from the technical perspective. Previously, Cerf served as MCI’s Senior Vice President of Architecture and Technology, where he led a team of architects and engineers to design advanced networking frameworks, including Internet-based solutions for delivering a combination of data, information, voice, and video services for business and consumer use. He also previously served as Chairman of the Internet Corporation for Assigned Names and Numbers (ICANN), the group that oversees the Internet’s growth and expansion, and Founding President of the Internet Society.